The operator of a coal-fired power plant must manage a large number of parameters to keep the unit operating safely, reliably and efficiently. The key tasks are maximizing power output and combustion efficiency whilst minimizing pollutant emissions. One important component of combustion efficiency is the sensible heat loss in the stack gases: the heat carried out of the process by the hot flue gases leaving the stack. Reducing this heat loss improves efficiency, so it is generally desirable to minimize the stack gas temperature. Reducing the stack gas temperature by 10 °C (18 °F) increases combustion efficiency by nearly 0.5%.
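The rule of thumb above can be turned into a quick estimate. The sketch below assumes, purely for illustration, that the gain scales linearly with the temperature reduction (about 0.05% per °C); in practice the relationship depends on the fuel, excess air and other operating conditions. It also shows why 10 °C corresponds to 18 °F: a temperature *difference* converts by the factor 9/5 alone, without the 32° offset. The function names are hypothetical.

```python
# Rule of thumb from the text: a 10 °C drop in stack gas temperature gives
# nearly 0.5% more combustion efficiency. Treating this as linear is an
# ASSUMPTION made only for this illustration.
GAIN_PER_DEG_C = 0.5 / 10.0  # % efficiency per °C of stack temperature drop


def efficiency_gain(delta_t_c: float) -> float:
    """Approximate efficiency gain (%) for a stack gas temperature
    reduction of delta_t_c degrees Celsius (hypothetical linear model)."""
    return GAIN_PER_DEG_C * delta_t_c


def delta_c_to_delta_f(delta_t_c: float) -> float:
    """Convert a temperature *difference* from °C to °F (no 32° offset)."""
    return delta_t_c * 9.0 / 5.0


for dt in (10.0, 20.0):
    print(f"{dt:.0f} °C ({delta_c_to_delta_f(dt):.0f} °F) lower -> "
          f"~{efficiency_gain(dt):.2f}% efficiency gain")
```

Beyond a few tens of degrees the linear assumption should not be trusted, and the acid dewpoint puts a hard floor under how far the temperature can be lowered anyway.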

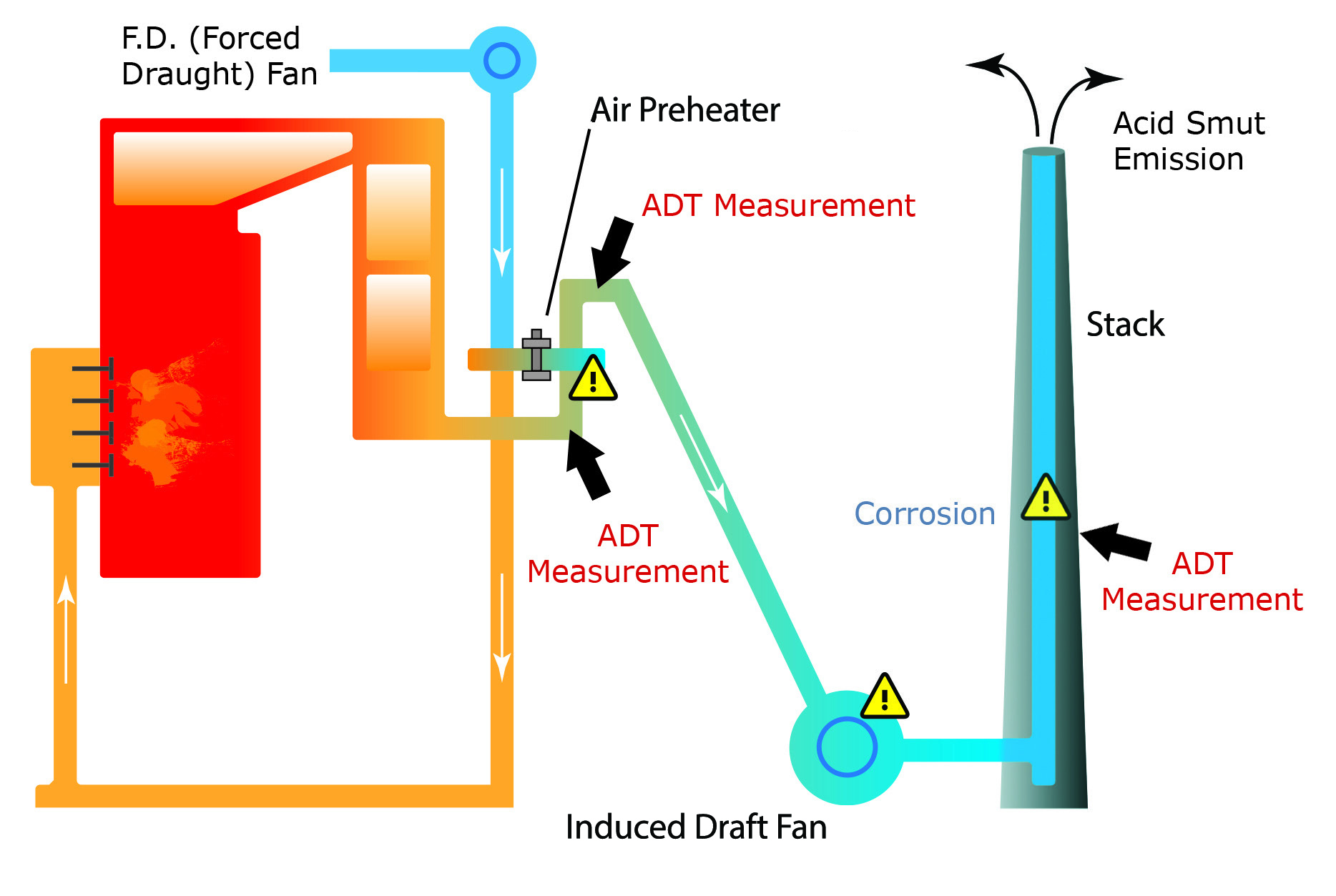

The minimum practical stack gas temperature is set by the acid dewpoint temperature (ADT), the temperature at which sulfuric acid condenses from the vapour to the liquid phase. If the flue gases are allowed to cool below the ADT, sulfuric acid condenses on the surfaces of the air preheater, induced draft fan, stack and associated ductwork, causing inevitable and expensive corrosion.
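For context, the ADT depends on the water vapour and sulfuric acid content of the flue gas, and it is sometimes *estimated* from empirical correlations. The sketch below uses the Verhoff and Banchero (1974) correlation as it is commonly cited (partial pressures in mmHg, temperature in kelvin); the coefficients should be checked against the original source before any real use. The function name and the ideal-gas conversion from volume fraction to partial pressure are assumptions of this example. Because the H2SO4 concentration in a real flue gas is rarely known precisely, an estimate like this is no substitute for measuring the ADT directly.

```python
import math

MMHG_PER_ATM = 760.0


def acid_dewpoint_c(h2o_vol_frac: float, h2so4_ppmv: float,
                    total_pressure_atm: float = 1.0) -> float:
    """Estimate the sulfuric acid dewpoint in °C (hypothetical helper).

    Uses the Verhoff and Banchero empirical correlation as commonly cited:
        1000 / T = 2.276 - 0.0294 ln(pH2O) - 0.0858 ln(pH2SO4)
                   + 0.0062 ln(pH2O) ln(pH2SO4)
    with T in kelvin and partial pressures in mmHg.
    """
    # Partial pressures from volume fractions (ideal-gas assumption).
    p_h2o = h2o_vol_frac * total_pressure_atm * MMHG_PER_ATM
    p_h2so4 = h2so4_ppmv * 1e-6 * total_pressure_atm * MMHG_PER_ATM
    ln_w = math.log(p_h2o)
    ln_a = math.log(p_h2so4)
    inv_t_scaled = (2.276 - 0.0294 * ln_w - 0.0858 * ln_a
                    + 0.0062 * ln_w * ln_a)
    t_kelvin = 1000.0 / inv_t_scaled
    return t_kelvin - 273.15


print(f"{acid_dewpoint_c(0.10, 10.0):.1f} °C")
```

With 10% water vapour and 10 ppmv sulfuric acid at atmospheric pressure this gives roughly 137 °C, and raising the acid concentration raises the estimated dewpoint.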

Measuring the ADT at different points within the process allows the operator to determine the minimum process temperature that maximizes efficiency whilst maintaining a safe margin above the ADT. The Lancom 200 portable acid dewpoint temperature monitor is ideal for this purpose. Available with probe lengths from 1.2 m (4 ft) to 3.0 m (10 ft), it is powered by rechargeable batteries and requires only a supply of compressed air to cool the sensor.
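The margin check described above can be sketched as a simple comparison at each measurement point: the lowest acceptable gas temperature is the measured ADT plus a safety margin, and the difference from the actual gas temperature shows how much further the gas could be cooled. The 10 °C margin, the `Reading` type, the helper names and the readings themselves are all hypothetical illustrative values, not measurements or a recommended margin; the appropriate margin is plant-specific.

```python
from dataclasses import dataclass

# HYPOTHETICAL safety margin above the measured ADT; not given in the text.
MARGIN_C = 10.0


@dataclass
class Reading:
    location: str       # measurement point in the flue gas path
    adt_c: float        # measured acid dewpoint temperature, °C
    gas_temp_c: float   # flue gas temperature at the same point, °C


def min_safe_temp(reading: Reading, margin_c: float = MARGIN_C) -> float:
    """Lowest gas temperature that keeps the chosen margin above the ADT."""
    return reading.adt_c + margin_c


def headroom(reading: Reading, margin_c: float = MARGIN_C) -> float:
    """Degrees the gas could still be cooled (negative = margin violated)."""
    return reading.gas_temp_c - min_safe_temp(reading, margin_c)


# Made-up example readings, for illustration only.
readings = [
    Reading("air preheater outlet", adt_c=128.0, gas_temp_c=150.0),
    Reading("ID fan inlet", adt_c=131.0, gas_temp_c=138.0),
]

for r in readings:
    h = headroom(r)
    status = "OK" if h >= 0 else "BELOW MARGIN"
    print(f"{r.location}: headroom {h:+.1f} °C ({status})")
```

Taking the highest `min_safe_temp` across all points gives a single conservative lower limit for the process, at the cost of ignoring any local differences in ADT.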